gsoc_journey.update({“Chapter 2”: [“First Evaluations”, “Google Foobar?”]})

Evaluation: a dreaded 10-letter word (just counted the number of letters😅) that brings stress and anxiety to everyone, from a small kid to a golden-ager (sounds so much better than ‘old person’, doesn’t it!!). The Cambridge Dictionary defines evaluation (noun), ɪˌvæl.juˈeɪ.ʃən, as “the process of judging or calculating the quality, importance, amount, or value of something”.

Evaluations bring out the best or the worst in people. I work better under pressure; I am one of those guys who cannot work until the room is on fire😂 (I have a better phrase, but it doesn’t quite qualify as PG-13😜).

Evaluations in GSoC, although procedurally simple, are a big deal as they determine if you get (cue the heavenly choir sound effect) the monthly stipend🤑. Just kidding, they determine whether the student will be allowed to continue their project. There are three main evaluations, one after each month, to assess whether the project is achieving its intended goals and whether the time was used efficiently for the greatest possible impact.

Now I understand procedural information is pretty boring so let’s directly jump to the fun stuff.

The Google FooBar Challenge

It happened at around 9:00 am on the 9th of June. I had pulled an all-nighter and was half-asleep, finding ways to optimize stingray, when on typing _________ my browser window pivoted and the above image unfolded. My eyes widened and my heartbeat skyrocketed. I looked at my screen in awe, blinked 100 times, and washed my face to confirm that what I was seeing was real.

It was the GOOGLE FOOBAR CHALLENGE!

The FooBar challenge is a coveted, hidden challenge, a special invitation that is sent only to a select few (makes me feel like James Bond😜). FooBar is Google’s secret hiring challenge. Google uses it to hire some of the best developers around the globe who they think could be a good match for the organization.

Google reportedly sends an invitation based on one’s search history and problem-solving-related keyword searches. If the hidden magic in the Google search algorithm chooses you, you may receive an invitation to Google FooBar (makes me feel like Harry Potter😂).

Curious developers are known to seek interesting problems. Solve one from Google?

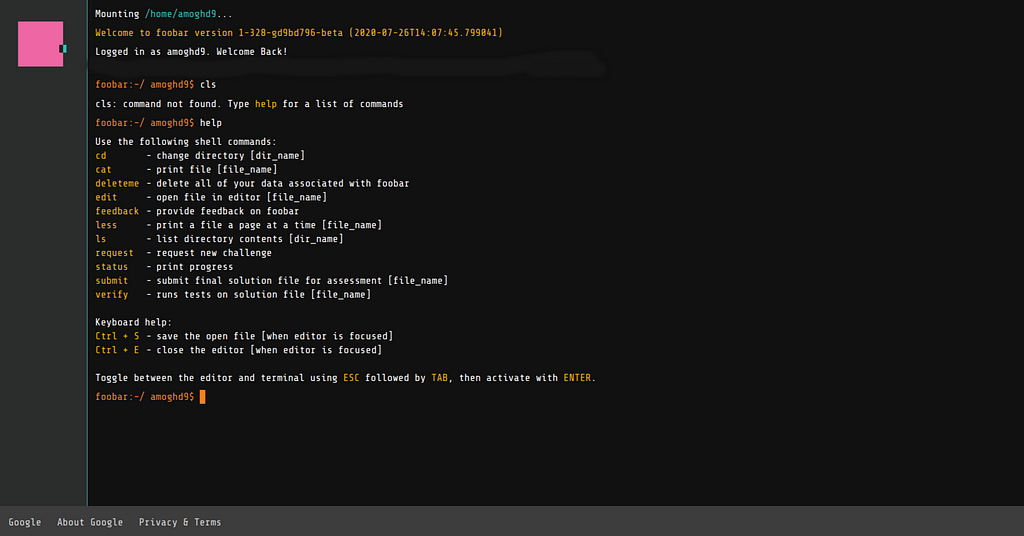

Without thinking twice I instantly clicked ‘I want to play’. A black screen with a command-line interface greeted me.

The FooBar challenge is essentially an online, time-bound Competitive Programming (CP) challenge with 5 rounds of increasing difficulty, each round having a different number of questions.

To spice things up (both in terms of interest and difficulty), Google has added a storyline. As you progress through the challenges, you are asked for a friend you’d like to refer to the challenge and, eventually, for some personal details.

FooBar proved to be a great learning experience for me as I’m not proficient in CP. I felt that the main aim was not only to test the skills and knowledge of an individual but also how quickly they could learn and implement solutions.

To learn more about FooBar you could read this article.

First GSoC Evaluation

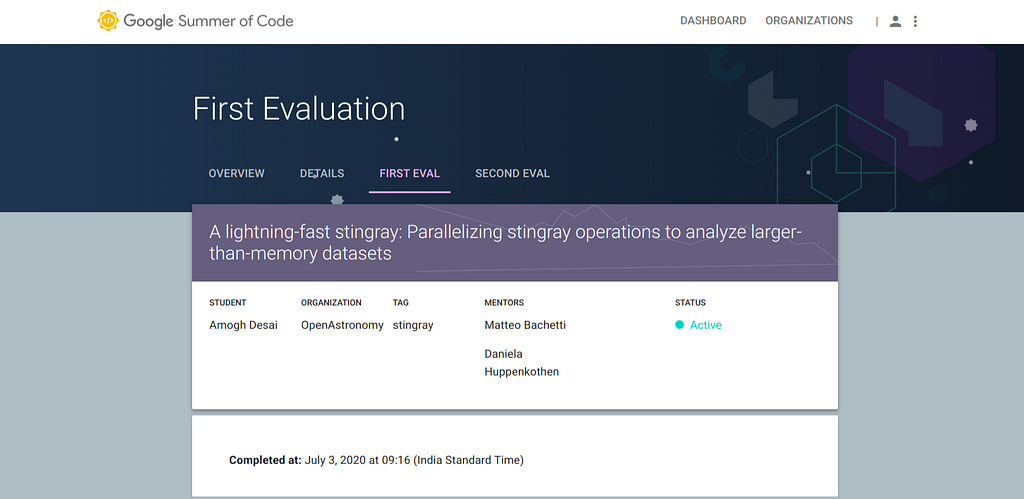

The first evaluations were scheduled from June 29 to July 3, 2020. My mentor Matteo and I had settled on two main deliverables crucial to the foundations of the project.

1. A benchmark analysis report containing the time and memory benchmarks and profiling results for all 31 functions (3000+ lines of code) in the Lightcurve, Powerspectrum, Crossspectrum, AveragedPowerspectrum and AveragedCrossspectrum classes.

2. Integrating airspeed velocity, a tool for benchmarking Python packages over their lifetime, into stingray.

The benchmark analysis report was very time-consuming, yet possibly the most important step of the project. It singled out the functions that were causing the most delay and provided an overall estimate of the performance of stingray.

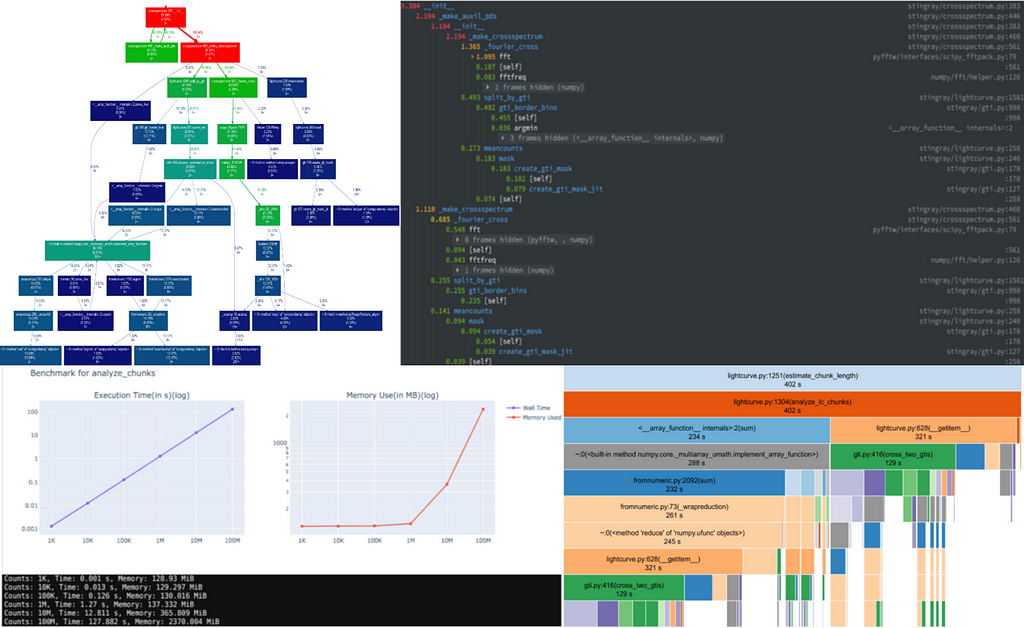

Benchmarking was performed in Jupyter notebooks. Datasets ranging in size from 1,000 (1K) to 100,000,000 (100M) data points were used for benchmarking. For basic benchmarking, the %timeit and %memit magic commands were used. Two profilers, Pyinstrument and cProfile, were used to profile time, and filprofiler was used to profile memory use.
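For readers who want to try the same idea outside a notebook, here is a minimal sketch of timing and memory benchmarking across input sizes. It is not the code from my report: `rebin_sum` and `benchmark` are hypothetical names made up for illustration, and it swaps in the standard library's `timeit` and `tracemalloc` for the %timeit and %memit magics (tracemalloc only tracks memory allocated by Python, so its numbers will not match %memit exactly).

```python
import timeit
import tracemalloc


def rebin_sum(values, factor):
    """Hypothetical function under test: sum every `factor` consecutive samples."""
    return [sum(values[i:i + factor]) for i in range(0, len(values), factor)]


def benchmark(func, sizes, repeat=3):
    """Return {size: (best_seconds, peak_bytes)} for func over inputs of each size."""
    results = {}
    for n in sizes:
        data = list(range(n))
        # Best wall-clock time out of `repeat` single runs.
        best = min(timeit.repeat(lambda: func(data, 10), number=1, repeat=repeat))
        # Peak memory allocated by Python objects during one call.
        tracemalloc.start()
        func(data, 10)
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        results[n] = (best, peak)
    return results


if __name__ == "__main__":
    for n, (seconds, peak) in benchmark(rebin_sum, [1_000, 10_000, 100_000]).items():
        print(f"{n:>9,} points: {seconds:.6f} s, peak {peak / 1024:.1f} KiB")
```

Running the function over a ladder of sizes like this is what makes scaling problems visible: a function that looks fine at 1K can fall apart at 100M.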

The cProfile results were saved as .pstats files, which were then visualized using SnakeViz (as a graphical viewer) and gprof2dot (as a call graph).
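As a rough sketch of that workflow (again, not the exact code from the report), the snippet below profiles a function with cProfile, dumps the raw stats to a .pstats file and reads them back with the standard `pstats` module. `slow_sum`, `profile_to_file` and the file name are hypothetical stand-ins for a real stingray call.

```python
import cProfile
import pstats


def slow_sum(n):
    """Hypothetical workload standing in for a stingray function."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_to_file(func, path, *args):
    """Run func(*args) under cProfile and dump the raw stats to `path`."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    profiler.dump_stats(path)
    return result


if __name__ == "__main__":
    profile_to_file(slow_sum, "slow_sum.pstats", 1_000_000)
    # Load the saved file and show the five most expensive calls by cumulative time.
    stats = pstats.Stats("slow_sum.pstats")
    stats.sort_stats("cumulative").print_stats(5)
```

The saved file is the same format the visualizers read, so something like `snakeviz slow_sum.pstats` or `gprof2dot -f pstats slow_sum.pstats | dot -Tpng -o callgraph.png` should pick it up, assuming both tools (and Graphviz) are installed.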

The results of Pyinstrument and filprofiler were saved as HTML files.

The results of the %timeit and %memit magic commands were visualized in an interactive Plotly graph in the Jupyter notebook itself and also saved as an HTML file for easier access.

The result of all of this was 535 files ready for analysis, compiled into a 74-page document, 9 new GitHub issues and 3 new Pull Requests (for now, more to come soon).

The functions causing the main slowdown were identified: check_lightcurve, counts_err, cross_two_gtis, sort_counts, join, fourier_cross, rebin_data, rms_error, gti_border_bins, check_gtis, operation_with_other_lc and p_multitrial_from_single_trial. These were further analyzed line by line for deeper insights (also included in the document).

airspeed velocity is a powerful tool that helps track the performance of a project over time, commit after commit. It essentially runs a set of benchmarks after every commit/change to check if the performance has been altered. It is used by numpy, scipy and astropy. The documentation is very well written (sighs with relief).

Thus, integrating airspeed velocity was comparatively easier.
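To give a feel for what an airspeed velocity benchmark looks like, here is a small sketch of a benchmark file. It is not taken from my stingray benchmarks: `bin_counts` and `BinCountsSuite` are hypothetical, and a real suite would import and call stingray instead. The parts that come from asv itself are the naming conventions: methods starting with `time_` are timed, methods starting with `peakmem_` record peak memory, `setup` runs before each benchmark, and `params` makes asv repeat the benchmark for each value.

```python
import random


def bin_counts(times, dt):
    """Hypothetical function under test: count events in bins of width dt."""
    counts = {}
    for t in times:
        key = int(t // dt)
        counts[key] = counts.get(key, 0) + 1
    return counts


class BinCountsSuite:
    """asv discovers benchmarks by method-name prefix; no asv import is needed."""

    # asv runs every benchmark once per parameter value.
    params = [1_000, 10_000, 100_000]
    param_names = ["n_events"]

    def setup(self, n_events):
        # Runs before each benchmark and is not included in the measurement.
        rng = random.Random(0)
        self.times = [rng.uniform(0, 100) for _ in range(n_events)]

    def time_bin_counts(self, n_events):
        bin_counts(self.times, 0.5)

    def peakmem_bin_counts(self, n_events):
        bin_counts(self.times, 0.5)
```

A file like this would live in the benchmark directory named in `asv.conf.json`; `asv run` then executes it against the chosen commits and `asv publish` builds the HTML report.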

A brief personal submission

The thing I enjoy the most about GSoC is that, just like most of you reading this article, I didn’t know, or rather hadn’t even heard of, all the tools I have used and am probably adept at right now.

I start working every day not knowing how to solve the task (often daunting) in front of me or what the day holds. But at the end of the day, I’m confident that I’ve learnt something new.

This excites me and I look forward to every new day working on my GSoC project.

You can check out my repository for airspeed velocity below. There is a link on the right side that leads to a webpage which looks relatively empty at the moment; someday, hopefully, it will look like numpy’s asv page.

You can also check the benchmarks I have performed below.

Benchmarking Stingray -> GSoC 2020 by theand9 · Pull Request #477 · StingraySoftware/stingray

Thank you soo much for giving it a read. Please comment and leave a clap if you liked the article. Feel free to reach out to me on LinkedIn.

Have an amazing day!! You are awesome!!!