A Month into GSoC

It’s been almost a month since the start of GSoC’s coding period, and the work, I’m glad to say, is progressing at a steady and satisfactory rate.

The Developments

Last time around, my first-ever not-so-meaningless contribution to open source had just been merged, and I was really happy about it. It also helped me get over the initial anxiety and intimidation I might have been feeling towards open source, which, I think, has helped speed things along.

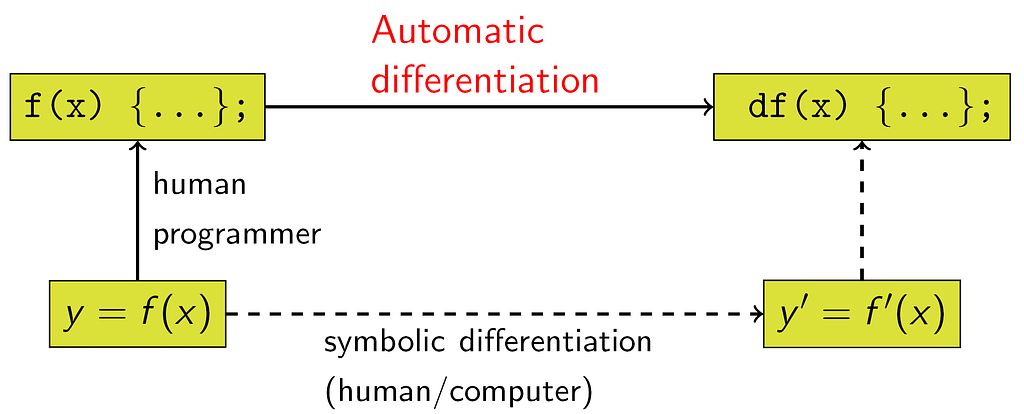

I started working on the optional features of my project around two weeks ago, but I had to scrap the initial implementation because it turned out to be very, very slow. It had to be replaced entirely with a better, more efficient approach, which was a bit less straightforward. Now, after two weeks of experimenting and iterating, a new pull request is open with the efficient version of the feature. It’s still a few minor commits away from its final form, but the core functionality works as expected, and if everything goes to plan (which is never a guarantee), a hefty part of my proposal’s objectives will be complete.